Preliminary benchmarking results for a Haskell I/O manager backend based on io_uring

Haskell provides lightweight user space threads that get scheduled by the Run Time System (RTS) onto a limited number of operating threads in a so-called M:N threading model. To handle blocking there are a few options, about which I have written before. For system calls on file descriptors that can return EAGAIN or EWOULDBLOCK, the RTS provides an I/O (not to be confused with IO) event manager where threads can register interest in a file descriptor before putting themselves into a sleeping state. If and when the file descriptor becomes available for reading or writing, the event manager will wake up any threads that have registered interest in it. The event manager system contains a manager module, which provides the interface for the rest of the Haskell code. This module will choose the most performant option from a number of backends available on the current system. So, it will choose the epoll backend on most Linux systems, the kqueue backend on BSD systems, etc. This article is about the design and first results of a new event manager backend based on the io_uring interface.

Side note: quite recently a native I/O manager for Windows systems was merged into GHC. Sadly, I don’t know enough about it yet to meaningfully include it into this article. As such, the information provided is only valid for POSIX-like systems.

The io_uring interface was added in the Linux kernel version 5.1 as an improvement to the earlier aio interface for asynchronous system calls. It operates by allocating two ring buffers that are shared between the kernel and the userspace program, known as the Submission Queue (SQ) and the Completion Queue (CQ). The program puts any number of system call requests (known as Submission Queue Entries (SQEs)) into the SQ and can submit any number of them to the kernel with a single system call. The kernel then goes off to do the things requested and when (or if) the request is complete the result will be put into the CQ as a Completion Queue Entry (CQE). This means that the userspace program can check if any CQEs are available merely by checking the CQ, without having to do any system calls. This potentially saves a lot of work on context switches between the kernel and userspace for I/O heavy programs like web servers. As of the time of writing, there are SQEs available for file reading/writing, accepting network connections, polling file descriptors and several more. The author of the io_uring interface has stated that he intends to eventually provide asynchronous versions of all system calls where it makes sense. To implement an I/O event manager backend with io_uring, we only need the SQEs for polling and cancelling previous poll requests, but it would be interesting to see how much of io_uring could be leveraged to provide “true” asynchronous system calls in the future.

IoUring backend design

Any I/O event manager backend must conform to the interface defined for it, which looks like this:

data Backend = forall a. Backend {

_beState :: !a

-- | Poll backend for new events. The provided callback is called

-- once per file descriptor with new events.

, _bePoll :: a -- backend state

-> Maybe Timeout -- timeout in milliseconds

-> (Fd -> Event -> IO ()) -- I/O callback

-> IO Int

-- | Register, modify, or unregister interest in the given events

-- on the given file descriptor.

, _beModifyFd :: a

-> Fd -- file descriptor

-> Event -- old events to watch for ('mempty' for new)

-> Event -- new events to watch for ('mempty' to delete)

-> IO Bool

-- | Register interest in new events on a given file descriptor, set

-- to be deactivated after the first event.

, _beModifyFdOnce :: a

-> Fd -- file descriptor

-> Event -- new events to watch

-> IO Bool

, _beDelete :: a -> IO ()

}That seems pretty reasonable, only four functions! Actually, there is also a new function to set up the backend so it’s more like five functions. ACTUALLY actually, the _beModifyFd function has three different modes of operation depending on which of its arguments are mempty. It can be either “add a polling request”, “modify a polling request” or “delete a polling request” so it is more like seven functions. Still, that is quite manageable. The interface description even describes what each function should do and which arguments it takes, which is more than I usually got in my previous day job.

Luckily, Ben Gamari had already written Haskell bindings for using io_uring that could be adapted for an I/O manager backend. He was also a huge help in finding a segfault-causing bug that I would never have caught myself. Many thanks!

An interesting implementation detail of io_uring is that every SQE has a userdata field that gets copied over verbatim into the CQE for its completion. As we can see in the type signature for _bePoll we need both the original file descriptor and the events to issue the callback. In the earliest version, the new backend generated a unique Int for each request and stored this information (along with a Bool indicating whether the poll request was a “multishot” or not) in a hashtable. This worked, but testing showed that throughput was only about half of what the epoll backend could provide. After some discussion on the #ghc IRC channel, we realized that it was possible to bitpack all the required data into the 64-bit userdata field. This cleared up the code significantly and provided a nice speed boost as well.

The code of the new backend can be found over here. At its heart, it is a popAndCallbackAllCQEs function. It is implemented as follows:

-- The workhorse of this backend. Pops CQEs off the ringbuffer

-- and issues callbacks to waiting threads

popAndCallbackAllCQEs :: UringState -> (Fd -> EI.Event -> IO ()) -> Int -> IO Int

popAndCallbackAllCQEs state callback !poppedSoFar = do

maybeCQE <- withMVar (ringLock state) $ \ring -> popCq ring

case maybeCQE of

Nothing -> return poppedSoFar

Just cqe -> do

let (fd,originalEvents,isMultishot,hasCallback)

= (deconstructUserdata $ cqeUserData cqe)

when (hasCallback) $ callback fd (convertEventBack $ cqeRes cqe)

-- if it was a multishot poll, resubmit. If it was an error,

-- don't resubmit to prevent getting into an infinite loop of errors

when (isMultishot && cqeRes cqe >= 0) $ do

void $ registerAndPostPollRequest state fd originalEvents True

popAndCallbackAllCQEs state callback $

if hasCallback then (poppedSoFar + 1) else poppedSoFarThis function pops CQEs off the CQ and after un-bitpacking the userdata field of the CQE, performs the callback. It then recurses until popCq returns Nothing, indicating that there are no more unread CQEs to be consumed. Even in this small bit of code it’s already clear how many edge cases can occur. Some backend-internal CQEs don’t have an associated callback, so for this case we should skip that. If a multishot poll request fails, blindly resubmitting might result in an infinite loop of erroring poll requests.

Test results

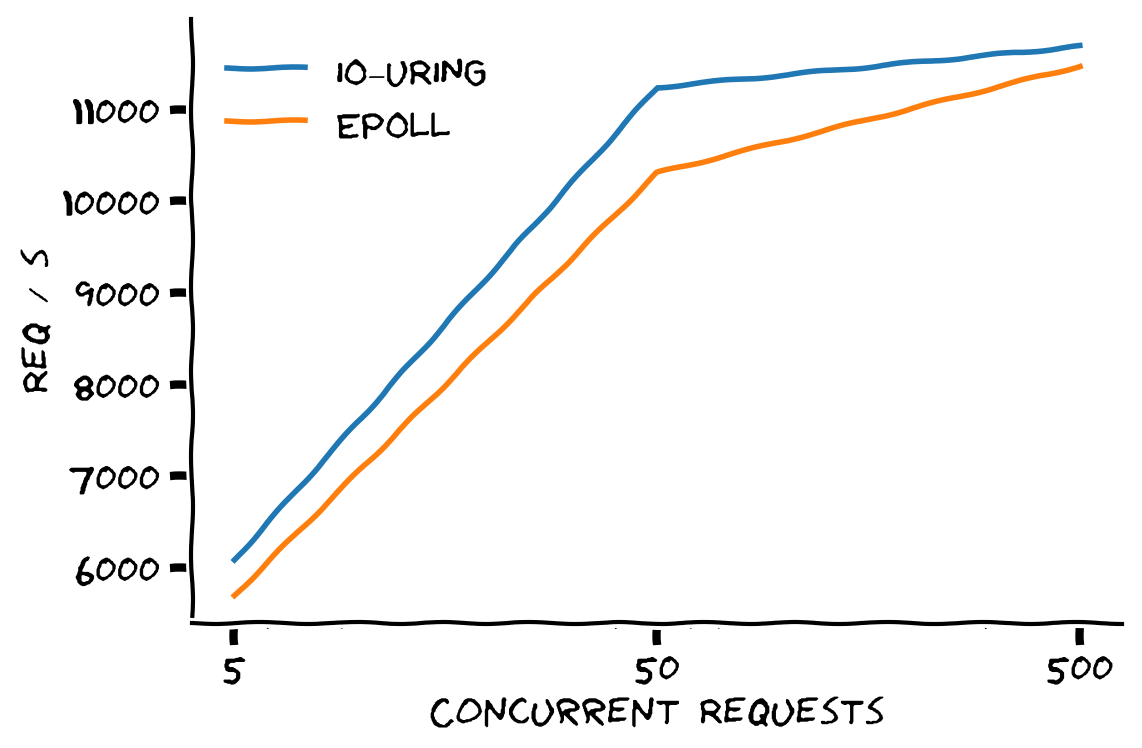

To test the relative performance of the io_uring backend compared to the epoll backend, I needed an application that spends as much of its time as possible on pollable I/O. The network package provides sample code for a simple echo server which I adapted into an extremely basic HTTP server. This program was compiled twice: once with the epoll backend and once with the io_uring backend. Then two t3.xlarge VMs with 4 CPU cores and 16 GB of RAM were acquired from AWS, one to host the test programs and one from which to run the ab HTTP load generator. I ran ab against each program in batches of 10.000 requests at concurrency levels of 5, 50 and 500 concurrent requests. To average out any jitter, each test run was performed three times and the results averaged.

As you can see, the io_uring backend provides about 7% more requests per second (in this toy benchmark) at 5 concurrent requests, about 9% more requests per second at 50 concurrent requests and about 1.8% more at 500 concurrent requests. Presumably, at very high levels of concurrent requests other bottlenecks start to dominate performance. After sharing these findings in the #ghc IRC channel, I was advised to increase the size of the GC nursery to 16 MB (up from the default of 1 MB) and track the RTS performance statistics with the -s RTS option.

It turned out that at low concurrency the backends performed much the same, but at higher concurrency levels the epoll backend started spending much more time in garbage collection than the io_uring backend. This was mostly caused by the amount of retained objects being much larger for epoll, and as we all know GC time is related to heap size in Haskell. In one particular case, epoll had a maximum residency of no less than 1,021,622,432 bytes (almost a full gigabyte) while io_uring reported a maximum residency of only 1,553,680 bytes, a reduction in memory use of almost 98.5%! This also resulted in GC pauses of almost 200 ms for the epoll backend to traverse all that memory, while the io_uring backend reported a maximum GC pause time of only 12 ms. Since most programs will also do other things than just report a single in-memory string back to callers this result is unlikely in “the real world”, but it is still remarkable. I suspect that the difference is caused by the epoll() syscall returning all the available file descriptors in a big array that the backend then forM_s over to perform the callbacks. The io_uring backend on the other hand can pop CQEs off the CQ one at a time and reclaim the memory for that CQE almost immediately afterwards.

As a final observation, using the new non-moving garbage collector reduced the memory use of the epoll backend to about 380 MB and increased io_uring memory use to about 10 MB. GC pauses went down to ~30 ms for the epoll backend and ~4 ms for the io_uring backend. If you have a service serving a lot of network requests using the epoll backend, it definitely seems worthwhile to test out the non-moving GC.

Summary

As an interface, io_uring seems to be the future of asynchronous system calls on Linux. Eventually, we hope to leverage it for much more than the limited “polling only” scope needed for an I/O event manager and perhaps even provide an “async manager” next to the current timer and I/O event managers. However, the interface is still under active development and is only available on fairly modern linux versions. For example, the first Ubuntu LTS version with adequate io_uring support to run the event manager described in this article is scheduled for release in about a week from the time of writing. As such, users that are for any reason prevented from upgrading to a recent Linux kernel will not be able to use the new backend.

At the moment, the io_uring event manager only lives in a separate branch and requires manual editing of GHC source files to be enabled. The next step will be to add an RTS flag to enable the io_uring backend if supported, similar to the --nonmoving-gc flag for enabling the concurrent garbage collector. This way, users can easily test out the new event manager to see if it brings benefits for their particular use case and equally easily revert back if it does not. Hopefully, enabling users to test the new backend in a variety of settings will provide more insight into the exact performance tradeoffs it brings. The test program used to generate the above results was constructed specifically to avoid “business logic” and spend as much time as possible in the I/O event manager, so we should not read too much into these results. Still, io_uring seems quite promising and I’m excited to see it evolve.